| Crunching the Numbers | December 20, 2009 |

In 2008, Jacoby Ellsbury was rated by Baseball America as the best defensive outfielder in Boston's minor league system. He made good on that prediction in 2008, impressing UZR and posting a 16.8 fielding RAA, 6.9 runs of which came in center field.

In 2009, the season Ellsbury was voted the Defensive Player of the Year by MLB.com, he was ranked by FanGraphs as having the worst defensive year of any center fielder, at -18.6 runs. So which is it? Is he the best in the league or the worst? Perhaps the MLB.com award was simply a popularity contest biased by a few web gems; there are a whole lot of Sox fans out there. That's possible, but I'm not quite willing to label Ellsbury the Derek Jeter of center field so quickly. Perhaps UZR isn't properly accounting for the peculiarities of Fenway? That isn't likely, given his rating in 2008 and Coco Crisp's in 2007.

It's been argued that Ellsbury is Jeter reborn: a poor defensive player who makes up for his deficiencies with flashy plays. The argument is that he makes poor reads off the bat and a poor initial step but makes up for it with his speed and a late diving catch. It's certainly possible, but looking over the video evidence, that's not my read. Some seem ready to dismiss Ellsbury on the basis of his UZR stat alone, but most fans don't seem to be so easily swayed. The fan scouting report over at The Book in 2008 and 2009 lists him as having an above average first step and average instincts (unlike Coco, who amusingly has incredible instincts, but the arm strength of a Girl Scout after a massive stroke).

On the other side, it's been argued that Ellsbury illustrates how meaningless defensive statistics are. No one has ever argued that defensive statistics are as definitive and meaningful as batting or pitching statistics, but ignoring them completely seems unwise. Theo Epstein seems to agree, given his move to ship Mike Lowell (and his awful 2009 UZR) off to the Rangers while Boston pays his salary. I think the most honest observers have to admit that they just don't know how to reconcile the statistics with the widespread perception that Ellsbury is a good defensive player.

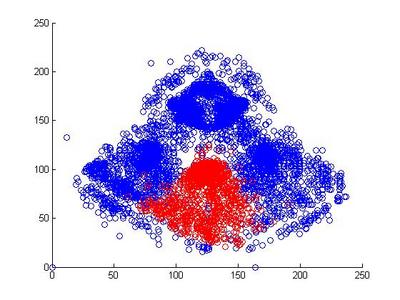

I looked at the pitch f/x data from 2008 and 2009 to get a sense of why Jacoby was being treated so poorly by UZR. Above is a plot of the 6500 plays at Fenway Park in which the x and y coordinates of the hit location were recorded. In red, I've highlighted all plays that involved the center fielder. In each of these plays the CF actually fielded the ball, either before or after it hit the turf; if the CF dove and missed, Gameday doesn't record it. For each of the two years, we can look at the distribution of hits and outs fielded by all center fielders playing in Fenway.

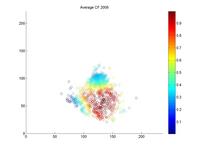

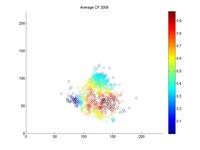

These raw data are hard to interpret directly, so I used a kernel density estimator to estimate the probability of each hit being turned into an out by either Ellsbury or the average CF playing in Fenway.
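A minimal sketch of that kind of estimate, assuming separate 2-D Gaussian kernel density estimates for outs and for hits, combined with Bayes' rule. The function names and the default `bandwidth` are hypothetical (the post doesn't say which kernel or bandwidth it used), and the toy coordinates below are made up, not Fenway data.

```python
import numpy as np


def gaussian_kde_2d(points, grid, bandwidth):
    """Evaluate a 2-D Gaussian kernel density estimate of `points` at each row of `grid`.

    points:    (n, 2) array of (x, y) hit locations
    grid:      (m, 2) array of locations at which to evaluate the density
    bandwidth: kernel standard deviation, in the same units as x and y
    """
    diffs = grid[:, None, :] - points[None, :, :]      # (m, n, 2) offsets
    sq_dist = np.sum(diffs ** 2, axis=-1)              # (m, n) squared distances
    kernel = np.exp(-0.5 * sq_dist / bandwidth ** 2)   # isotropic Gaussian kernel
    # Normalise so each density integrates to 1 over the plane.
    return kernel.sum(axis=1) / (2 * np.pi * bandwidth ** 2 * len(points))


def p_out_given_location(outs, hits, grid, bandwidth=10.0):
    """P(out | location) from separate densities for outs and hits via Bayes' rule."""
    outs, hits, grid = (np.asarray(a, dtype=float) for a in (outs, hits, grid))
    prior_out = len(outs) / (len(outs) + len(hits))
    joint_out = gaussian_kde_2d(outs, grid, bandwidth) * prior_out
    joint_hit = gaussian_kde_2d(hits, grid, bandwidth) * (1 - prior_out)
    total = joint_out + joint_hit
    # Far from every recorded ball both densities can underflow to 0; report NaN there.
    return np.divide(joint_out, total, out=np.full_like(total, np.nan), where=total > 0)


# Toy data (hypothetical): outs clustered deep, hits clustered shallow.
outs = [[120, 80], [125, 85], [130, 80], [125, 75]]
hits = [[120, 40], [125, 45], [130, 40], [125, 35]]
print(p_out_given_location(outs, hits, [[125, 80], [125, 40]]))  # near 1, then near 0
```

Running the same function once on Ellsbury's balls and once on every CF's balls gives the two probability surfaces to compare.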

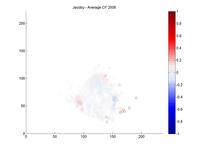

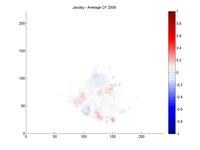

The blue circles in the scatter plots show hit locations from 2008 and 2009 that Ellsbury would have been less likely than the average CF to turn into outs; red circles show the locations Ellsbury would have been more likely to turn into outs. Many of the circles are nearly white because there is little or no difference between Ellsbury and the average CF.
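One way to produce that red/white/blue shading is a diverging color scale on the difference between the player's and the average CF's estimated out probabilities. This is a sketch, assuming a linear ramp that saturates at a hypothetical `scale` of 0.2; the post doesn't give its actual color limits.

```python
def diff_to_rgb(p_player, p_average, scale=0.2):
    """Map a difference in out probability to an (r, g, b) tuple in [0, 1].

    Red: the player is more likely than the average CF to turn the ball into an out.
    Blue: less likely. White: no difference. `scale` is the difference at which
    the color saturates (a hypothetical choice).
    """
    d = (p_player - p_average) / scale
    d = max(-1.0, min(1.0, d))              # clip to [-1, 1]
    if d >= 0:
        return (1.0, 1.0 - d, 1.0 - d)      # fade from white toward red
    return (1.0 + d, 1.0 + d, 1.0)          # fade from white toward blue


print(diff_to_rgb(0.50, 0.50))   # (1.0, 1.0, 1.0), no difference: white
print(diff_to_rgb(0.90, 0.50))   # (1.0, 0.0, 0.0), much more likely: red
print(diff_to_rgb(0.20, 0.50))   # (0.0, 0.0, 1.0), much less likely: blue
```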

Gameday recorded a small minority of the actual hit locations, so it would be difficult to say anything conclusively even if there were strong patterns in the data. (It's also unclear to me how many at-bats had zones recorded in the data used to calculate UZR; Retrosheet is missing hit locations for the vast majority of 2009 games in Fenway.) But it looks like Ellsbury didn't differ significantly from the average CF in 2008. In 2009, it looks like he may have been weaker at coming in on balls dropping in front of him, but there's no way to tell whether that's pure noise. What we absolutely don't see is the gaping hole you would expect in the range of the game's worst center fielder. Ellsbury seems to miss the plays that any CF would have missed, and to make the plays that any CF would have made. If I were a betting man, I would guess that Ellsbury's UZR will be league average next year.

Comments

Perhaps there's something about Fenway that makes UZR more unpredictable there. Coco Crisp also saw wild swings in his UZR when he played CF for Boston: +1 to +22 to -15.

Posted by: Ken Arneson at December 20, 2009 5:31 PM

If I were a betting man, I would go with a slightly above average UZR next year. I see this year as an aberration and some bad luck. Another year of experience and some bounce back should help him out. It will be interesting to see what he does next season, especially with all the noise about trading him to San Diego.

Posted by: Ed at December 20, 2009 6:35 PM

I'm a Red Sox fan. I agree that he's probably around average in CF. Considering Ellsbury's great UZR numbers in LF/RF, UZR more or less agrees with him being average too.

This whole mini-controversy is pretty silly to me.

Posted by: JB H at December 20, 2009 6:46 PM

Interesting stuff. Ironically, I just had this conversation with a buddy the other day about how I view Ellsbury as at least a league-average CF, but have trouble reconciling that with his UZR. I then mentioned that my biggest issue with him is balls dropping in front of him that a lot of CFs get. On the other hand, you almost never see the ball go over his head. Maybe he needs to play a step or two closer to the infield and take a bit more of a chance that he can catch up with the deep ball.

Posted by: Matt M at December 20, 2009 7:36 PM

Hey Chris, I'm having trouble with the orientation of the graphs. What's with the lack of points between the four regions of the first plot?

And where does your metric rank Ellsbury summing up all those points? Would you rather have Ellsbury or Cameron in center next year?

And what are the advantages to kernel smoothing over local regression? Or is it just a personal preference? Thanks.

Posted by: Jeremy Greenhouse at December 20, 2009 8:42 PM

You are aware that Gameday (not Pitch f/x, Pitch f/x has nothing to do with the hit locations given on Gameday) gives the location of where the ball is picked up and not where the ball lands? Given this the rest of your analysis is meaningless and also has the most unintelligible graphs I have ever seen.

Posted by: Peter Jensen at December 21, 2009 6:08 AM

Jeremy,

So home plate is at the top. It's as if you were really, really high up in the CF bleachers, so left is on the right and vice versa. I think the gaps between the infield and outfield are caused by the infielders, who catch the balls that would have dropped in that narrow band.

Re: who to put in CF, I don't know. There are too many variables. How will Ellsbury do in LF? Will he play well in front of the monster? Will he be able to play shallower because of his speed? Would Cameron be a better fit there? Cameron vs. righties is a poor sight. But Hermida would have to seriously bounce back in order to be more valuable, even in LF. I think I'd put Ellsbury in LF, play him really shallow, and make sure that when Hermida plays, he takes time away from Cameron facing righties.

A kernel density estimator is a probabilistic method. We ultimately want to know the probability of an out, given a hit location, P(O|L). We achieve that by using the KDE to estimate P(L|O). So for this purpose, the KDE makes the most sense. But for general fitting problems, a local regression is an interesting alternative.
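Written out, that Bayes'-rule step looks like this, where L is a hit location, O an out, and H a ball in play that went for a hit (the H notation is added here for illustration). Each density p(L | ·) would be a kernel density estimate built from the corresponding set of balls, and the priors can be taken from the observed counts of outs and hits, n_O and n_H:

```latex
P(O \mid L) = \frac{p(L \mid O)\,P(O)}{p(L)}
            = \frac{p(L \mid O)\,P(O)}{p(L \mid O)\,P(O) + p(L \mid H)\,P(H)},
\qquad
P(O) \approx \frac{n_O}{n_O + n_H},
\quad
P(H) = 1 - P(O)
```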

Posted by: cdm at December 21, 2009 6:54 AM

Peter,

I disagree. If all you knew was the location where the ball hit the ground, the only information you would be able to glean is the probability of producing an out for a ball that landed at that location. That's the outcome, which is highly variable. What is more interesting is the process: how far did the fielder go to field the ball?

I didn't emphasize this because there are no noticeable differences, but the location of the ball when the fielder made the play is at least as interesting as where the ball landed.

Consider a ball hit into a gap. It could have landed right behind the second baseman. So what? What matters is how quickly the fielder got to it, and that will be reflected in the location it was fielded. Did it get to the wall? Or was it cut off?

Consider a ball hit in front of the CF. We know what balls the CF can catch based simply on the fielded location of outs. The locations of fielded balls that were not outs provide additional information. A CF with a longer range will have a wider circle in which he plays the ball, regardless of the outcome. It tells us something about the process, not the outcome. And Ellsbury's data imply no real difference in either outcome or process.

But all of that aside, didn't you use these data to create gameday-based fielding metrics here, here and here. You find these data meaningless?

Posted by: cdm at December 21, 2009 7:16 AM

Thanks. Any thoughts on outfield positioning in Fenway?

Posted by: Jeremy Greenhouse at December 21, 2009 10:54 AM

Peter,

That doesn't make the analysis meaningless at all. Are you suggesting that the location at which the CF fields a play provides no information about their range? That's not reasonable. The questions "How far can a player travel to catch a ball?" and "How far can a player travel to intercept and field a ball?" are two ways of evaluating the same latent variable. Do you mean to argue that one is more legitimate than the other?

Jeremy,

The more I look into it, the more I like LOWESS. It's clever to treat the distribution as a manifold and then compute the slope locally. Actually, I *really* like this: http://www.cs.cmu.edu/~kdeng/thesis/logistic.pdf

That is what we should be using: multi-category locally-weighted logistic regression (ch. 4.3.4).
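For readers unfamiliar with the idea, here is a minimal sketch of locally weighted logistic regression for a single binary outcome (out vs. not out), a simplification of the multi-category version mentioned above. It assumes Gaussian distance weights with a hypothetical `bandwidth`, a small ridge penalty for numerical stability, and Newton (IRLS) updates; it is not code from the linked thesis.

```python
import numpy as np


def local_logistic_prob(X, y, query, bandwidth=5.0, ridge=1e-3, iters=25):
    """Estimate P(out | location = query) with locally weighted logistic regression.

    X: (n, 2) hit locations, y: (n,) 1 for an out and 0 otherwise.
    Each ball is weighted by a Gaussian kernel of its distance to `query`,
    and a weighted logistic model that is linear in (location - query)
    is fitted; its intercept is the log-odds of an out at `query`.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    offsets = X - np.asarray(query, dtype=float)
    w = np.exp(-0.5 * np.sum(offsets ** 2, axis=1) / bandwidth ** 2)
    A = np.column_stack([np.ones(len(X)), offsets])  # intercept + local slopes
    beta = np.zeros(A.shape[1])
    for _ in range(iters):
        eta = np.clip(A @ beta, -30, 30)             # avoid overflow in exp
        p = 1.0 / (1.0 + np.exp(-eta))
        # Gradient and (negative) Hessian of the ridge-penalised weighted log-likelihood.
        grad = A.T @ (w * (y - p)) - ridge * beta
        hess = (A * (w * p * (1 - p))[:, None]).T @ A + ridge * np.eye(A.shape[1])
        beta += np.linalg.solve(hess, grad)          # Newton step
    return 1.0 / (1.0 + np.exp(-beta[0]))
```

Unlike the KDE route, this models P(out | location) directly, and refitting at each query point lets the local slope change across the field.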

Posted by: cdm at December 21, 2009 12:40 PM

I'm working on SAFE (Spatial Aggregated Fielding Evaluation) with Shane Jensen at the Wharton School, and we incorporate the likelihood of a fielder catching a ball in play into our calculations. Granted, we do not use a kernel like you do (we use a probit model), but it is similar in spirit. Our resulting estimates pin him as exactly average in center field in 2008 (about 0.02 runs saved above average over a season). Incorporating our 95% confidence interval (which I feel is the biggest advantage of using a system like SAFE), we have him anywhere between a -10 and a +10 run player.
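As an illustration of the probit idea only (a heavily simplified sketch, not SAFE itself), the catch probability can be modelled as the standard normal CDF of a linear function of how far the fielder had to go, and runs saved as the sum of (actual minus expected) outs times a run value. The coefficients `b0` and `b1`, the distance units, and the `run_value` per out are hypothetical placeholders, not fitted or published values.

```python
import math


def normal_cdf(z):
    """Standard normal CDF, the link function of a probit model."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def catch_probability(distance, b0=2.0, b1=-0.03):
    """Probit model: P(catch) = Phi(b0 + b1 * distance). Hypothetical coefficients."""
    return normal_cdf(b0 + b1 * distance)


def runs_saved(plays, run_value=0.75):
    """Sum of (actual out - expected out) * run value over (distance, caught) pairs."""
    return sum((int(caught) - catch_probability(d)) * run_value for d, caught in plays)


# A routine catch earns little credit; a catch after a long run earns much more.
print(round(runs_saved([(0, True)]), 3))     # 0.017
print(round(runs_saved([(120, True)]), 3))   # 0.709
```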

My take from this is that in 2008 there were not enough observations (489 balls in play) for us to make a good estimate of his true ability. I believe that while UZR might be unbiased (i.e. on average it represents a player's true contribution in a season), it is hurt by its high variance around the true value.
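The sample-size point can be illustrated with a quick simulation: give a hypothetical, exactly average fielder 489 balls in play with assumed catch probabilities, and see how much his runs-saved total moves from season to season by chance alone. The mix of catch probabilities and the `run_value` of 0.8 runs per out are assumptions chosen for illustration, not SAFE or UZR parameters.

```python
import random
import statistics


def simulate_seasons(catch_probs, run_value=0.8, n_seasons=2000, seed=1):
    """Runs saved per simulated season for a fielder whose true value is exactly 0.

    Each ball is caught with its listed probability; credit is
    (caught - expected) * run_value, so the expected total is zero.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(n_seasons):
        total = 0.0
        for p in catch_probs:
            caught = rng.random() < p
            total += (int(caught) - p) * run_value
        totals.append(total)
    return totals


# Hypothetical mix of 489 balls: mostly routine, some moderate, some hard.
probs = [0.98] * 300 + [0.6] * 100 + [0.1] * 89
totals = simulate_seasons(probs)
cuts = statistics.quantiles(totals, n=40)   # cut points every 2.5%
print(f"mean {statistics.mean(totals):+.1f} runs, "
      f"95% range {cuts[0]:+.1f} to {cuts[-1]:+.1f} runs")
```

Under these assumptions the standard deviation works out analytically to about 4.9 runs (the square root of the sum of p(1 - p), times 0.8), so the middle 95% of seasons spans roughly 19 runs even though the fielder's true value is exactly zero.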

One thing to note here is that we are estimating a fielder's true ability at preventing/allowing runs in the field, not necessarily their exact contribution that year.

In the end, after working with this SAFE stuff for a while, I really do believe that defense is still largely overrated, not only because its contribution to a team's performance is overestimated but, more importantly, because of the large amount of variation from season to season by defenders.

Posted by: James Piette at December 21, 2009 10:33 PM

Chris, cool. I've been using locally-weighted logistic regressions to find probabilities (strike zone and hit by pitches).

James, by overrated I assume you mean overrated by the sabermetric community? Why does the amount of variation from season to season matter? Do you mean there's a large amount of regression from season to season or that there are large error bars around a player's defensive projection from season to season? And if you don't mind my asking, how does SAFE rank Franklin Gutierrez?

Posted by: Jeremy Greenhouse at December 21, 2009 10:59 PM

You find these data meaningless?

Chris - I never said that I found the data meaningless. I said that the rest of your analysis in the article was meaningless. You cannot use a kernel density estimator or regression or any other method to calculate the probability of catching a ball on data that mixes locations where the ball actually landed (caught balls) with hits where the ball may have rolled there or rebounded back to that location after hitting the wall.

Consider a ball hit into a gap. It could have landed right behind the second baseman. So what? What matters is how quickly the fielder got to it, and that will be reflected in the location it was fielded. Did it get to the wall? Or was it cut off?

Yes, let's consider a ball hit into the gap. It would be helpful to know how quickly a fielder got to it and whether he cut it off. But you can't know either of these things from the Gameday data: it does not give the time it took to get to the ball, you don't know whether the ball was fielded in that position before or after it got to the wall, you don't know whether the ball actually landed "right behind the second baseman" or hit the wall in the air, and finally you don't know the position from which the fielder started. So really, what the hell are you talking about?

But all of that aside, didn't you use these data to create gameday-based fielding metrics here, here and here.

Yes, I did use Gameday data for my fielding metric. But if you had actually read the articles you cited, you would know that I first had to normalize the data to minimize differences from park to park that were due to the out-of-scale field diagrams used for inputting the data. I also specifically said that I had chosen to use a single large fielding zone for my metric and only the angular measurements calculated from the Gameday data, because Gameday only gave the location where the ball was picked up, and that was not sufficiently accurate for a more granular approach. The Gameday data can be very useful, just not in the way that you chose to use it.
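For context, an angular (spray-angle) measurement can be derived from the Gameday hit coordinates along these lines. This sketch assumes home plate sits at roughly (125.42, 198.27) in Gameday's pixel coordinates, with pixel y growing downward so the outfield has smaller y; that is a commonly used approximation, and as noted above the park diagrams are not to scale. The function name is hypothetical, and this is not the BZM code.

```python
import math

# Approximate Gameday pixel coordinates of home plate (an assumption; the true
# position differs slightly from one park diagram to another).
HOME_X, HOME_Y = 125.42, 198.27


def spray_angle(hc_x, hc_y):
    """Angle of a batted ball in degrees from the line through second base.

    0 = straight to center field, negative = toward left field,
    positive = toward right field. Pixel y grows downward in the Gameday
    image, so balls hit to the outfield have smaller y than home plate.
    """
    return math.degrees(math.atan2(hc_x - HOME_X, HOME_Y - hc_y))


print(round(spray_angle(125.42, 100.0), 1))   # 0.0, dead center
print(round(spray_angle(25.42, 98.27), 1))    # -45.0, along the left-field line
```

Because the angle depends only on direction from home plate, it is unaffected by how far out the ball was picked up, which is the property the comment relies on.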

Posted by: Peter Jensen at December 22, 2009 5:22 AM

Gameday recorded a small minority of the actual hit locations,

And what do you mean by this statement? In 2009 Gameday recorded hit locations for 99.3% of all the hit balls.

Posted by: Peter Jensen at December 22, 2009 5:46 AM

I do think that people in the sabermetrics community are starting to walk away from the idea that defense is a small contributor to run prevention. Now, that is not to say that if you put Adam Dunn in center your team won't be severely hurt by it, but I do mean to say the difference between Melky Cabrera and Jacoby Ellsbury last year is barely going to be one win, if any.

The amount of variation is very important. It is why people think so little of ERA nowadays. Even though it is the most appropriate statistic for the actual, tangible performance of a player in a season, it is incredibly noisy and, thus, could be driven largely by luck.

I was not referring to projection, since UZR and SAFE are both measures of past performances. So, yes, you could say regression from season to season, but I meant more that it highly varies away from the mean or true UZR with each measurement.

In 2008, Franklin Gutierrez recorded a 9.22 SAFE value with a 95% CI of [3.32,15.80]. His SAFE value not only ranked him as the best right fielder that year, but his confidence interval put him in a small group of right fielders that were found to be statistically significantly different from 0. His '06 and '07 values were less impressive: 2.11 and -0.68.

Posted by: James Piette at December 22, 2009 8:58 AM

I am a user of Gameday who is also very aware of where it has shortcomings. I totally agree with Peter that you can really only use the vectors and not the distance in the outfield, as we can not tell from Gameday where the ball landed.

My Gameday based defense did show a large drop for Ellsbury. In 2006 he played only CF, saving 16 hits and 14 runs compared to average. In 2007, +8 runs in CF, +4 in RF & LF. 2008, +3 in CF, +18 in RF & LF. 2009, CF only, +0.2.

A lot of his run prevention comes from preventing batters from stretching hits into doubles and triples, instead of making outs of fly balls. This is not counted in all metrics (I'm not sure whether UZR does or not).

Meanwhile, I measure Jason Bay as going from -11 runs in 2008 to +10 in 2009, the opposite direction from Ellsbury. Knowing that Gameday records which outfielder retrieved the ball, which we are using as a proxy for who had the best chance to field it, I wonder if there were more balls this past year that Bay was more responsible for fielding, but Ellsbury picked up after Bay failed to make the catch. Responsibility for these balls would be switched to Ellsbury instead of Bay. This is a guess, but I could test it by measuring Bay and Ellsbury in 2008 and 2009 in each vector between them.

Posted by: Brian Cartwright at December 22, 2009 5:25 PM

Brian - My BZM fielding metric does measure runs saved by preventing extra base hits. I have Ellsbury as saving 21 runs at the corners in 2008 and 11.6 runs in center, slightly less if I use a different measure which accounts for the advancement of the runners. I haven't completed analyzing 2009.

Posted by: Peter Jensen at December 22, 2009 6:04 PM

Peter, your articles and earlier ones by Dan Fox were large influences on my design.

As we both use Gameday for input, our observed plays made should be very much the same. The differences would most likely come on how we model the expected plays made, which includes what all we choose to use and not use. I do use minor league stats, but have not yet linked the hits table for vectors or parsed runner advancement (arms rating). I have completed through 2009.

Posted by: Brian Cartwright at December 22, 2009 7:22 PM

I think you need to examine how MLB.com and FanGraphs make their rankings.

That would have the biggest impact on them having wildly different rankings.

Assuming they will share that info.

Posted by: shthar at December 24, 2009 7:28 PM

Sorry for the repetition. Peter, looking at the ratio of hits fielded at a location isn't meaningless, it's just a different measure of the same underlying variable. Instead of "Percent of balls hit to a location fielded by a player", it's "Percent of balls fielded by a player at a location turned into outs." Both are noisy measures of range. I think your criticism is uncharitable at best and unreasonable at worst.
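To make the two measures concrete, here is a minimal sketch that computes both from a list of plays, each recorded as a (zone, fielder, is_out) tuple. The zone labels, tuple layout, function name, and toy plays are hypothetical; in practice the zones would come from binned Gameday hit locations.

```python
from collections import defaultdict


def zone_rates(plays, player):
    """Return two per-zone ratios for `player` from (zone, fielder, is_out) tuples.

    share_fielded[z]: share of all balls recorded in zone z that `player` fielded.
    out_rate[z]:      share of the balls `player` fielded in zone z that became outs
                      (only zones where `player` fielded at least one ball).
    """
    total = defaultdict(int)
    fielded = defaultdict(int)
    outs = defaultdict(int)
    for zone, fielder, is_out in plays:
        total[zone] += 1
        if fielder == player:
            fielded[zone] += 1
            if is_out:
                outs[zone] += 1
    share_fielded = {z: fielded.get(z, 0) / n for z, n in total.items()}
    out_rate = {z: outs.get(z, 0) / n for z, n in fielded.items()}
    return share_fielded, out_rate


# Toy plays (hypothetical, not real data).
plays = [("deep", "CF", True), ("deep", "CF", False),
         ("deep", "LF", False), ("shallow", "LF", True)]
print(zone_rates(plays, "CF"))
```

Both ratios come from the same recorded plays; they differ only in which denominator they condition on.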

You don't improve the quality of your data by removing information, even if you know that information is noisy. Without solid data (perhaps hit/FX), none of the defensive statistics (yours included) have much reliability. Hence wild variability in the scores.

Given the lack of reliability, what you should always do when you find highly contradictory measurements of the same latent variable is look into the raw data to see if there are any confounding causes. The KDE isn't an analysis; I presented no statistics. It is a glorified histogram for visualizing raw data. This descriptive account of the raw pattern of data led to the conclusion that there was no obvious pattern in these data to support Ellsbury's UZR rating.

Posted by: cdm at December 26, 2009 8:54 AM

I'm not as expert as any of you on fielding metrics; and I can't claim to fully understand the graphs.

But it seems to me that the measurement system (where a player touches a ball, whether or not it has already touched the ground) is a pretty raw statistic to draw conclusions from, and much more so for an outfielder than an infielder. It seems to me that infielders deal with more consistent batted balls: grounders and pop-ups 90% of the time, with only the occasional liner or lazy fly. Outfielders touch a much wider variety of batted balls: grounders through the infield, lazy fly balls right at them, screamers off the wall, line-drive singles up the middle, lazy flies that come down in the gap, liners in the gap. Maybe I'm missing something, but unless you know what kind of ball a fielder touched, doesn't that make the data pretty meaningless?

Posted by: GreggB at December 29, 2009 4:35 AM